Harnessing the Power of Open and Cost-Effective Data Solutions

Introduction

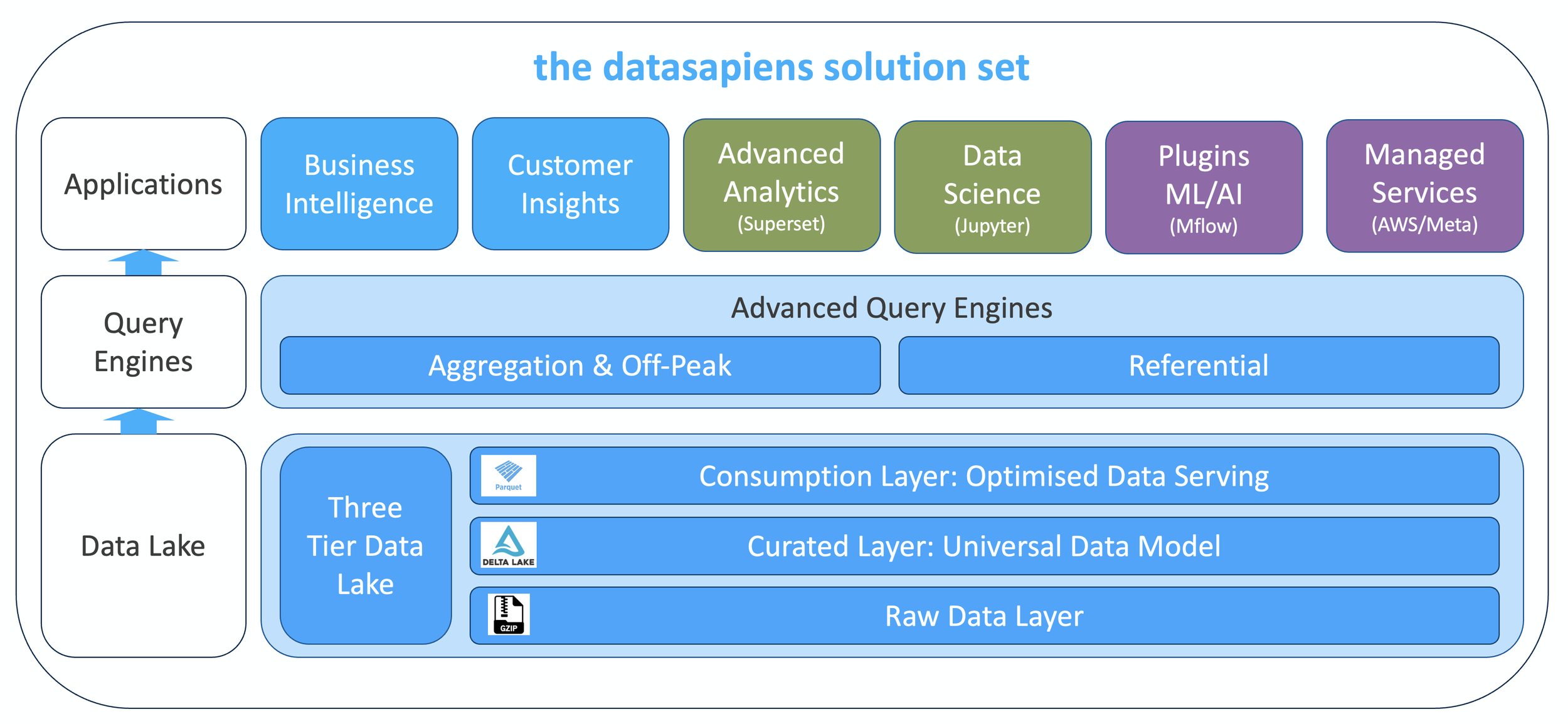

In today’s data-driven landscape, organisations need data management solutions that are robust, scalable, flexible, and economical. datasapiens' three-tier Data Lake architecture is built around these requirements and provides a blueprint for making effective use of data. This blog post examines the core features of our Data Lake framework: its openness, customisable architecture, cost efficiency, and readiness for technological change. This architecture is the backbone of our current offerings and the foundation for the applications we will explore in our next blog post.

1. Open and Transparent Infrastructure

Our Data Lake is fundamentally open, constructed with open-source technologies that provide numerous strategic advantages:

Community-Driven Innovation: Open-source tools benefit from the contributions of a global developer community, which helps our technology keep pace with advances in the field.

No Vendor Lock-In: Open-source platforms let organisations switch solutions as business needs evolve, without prohibitive costs or dependence on a single vendor.

Transparency and Trust: Because the source code is open, users can inspect and adapt it, which builds confidence in its security and operational behaviour.

2. Flexibility Tailored to Organisational Needs

Our Data Lake’s flexible design can be customised to meet the specific data-handling requirements of each organisation:

Scalable and Adaptable: Handles structured and unstructured data efficiently and integrates with existing IT infrastructure.

Modular and Upgradable: Supports upgrading individual components, such as replacing Hadoop MapReduce with Apache Spark, without overhauling the entire system.
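Swapping one component for another without touching the rest of the system works when pipeline code depends on an interface rather than on a particular engine. The stdlib-only sketch below illustrates that idea under stated assumptions: `ComputeEngine`, the two toy engines, and the `ENGINES` registry are hypothetical stand-ins for an older and a newer processing framework, not Hadoop or Spark APIs.

```python
from typing import Callable, Dict, Iterable, List, Protocol


class ComputeEngine(Protocol):
    """Hypothetical interface that every engine adapter implements."""

    name: str

    def run(self, rows: Iterable[dict], key: str) -> Dict[str, int]:
        """Count rows per value of `key`."""
        ...


class SingleNodeEngine:
    """Toy stand-in for an older engine: one sequential pass over the data."""

    name = "single-node"

    def run(self, rows: Iterable[dict], key: str) -> Dict[str, int]:
        counts: Dict[str, int] = {}
        for row in rows:
            counts[row[key]] = counts.get(row[key], 0) + 1
        return counts


class PartitionedEngine:
    """Toy stand-in for a newer engine: count per partition, then merge."""

    name = "partitioned"

    def __init__(self, partitions: int = 4) -> None:
        self.partitions = partitions

    def run(self, rows: Iterable[dict], key: str) -> Dict[str, int]:
        # Distribute rows round-robin across partitions.
        buckets: List[List[dict]] = [[] for _ in range(self.partitions)]
        for i, row in enumerate(rows):
            buckets[i % self.partitions].append(row)
        # Count each partition independently, then merge the partial results.
        merged: Dict[str, int] = {}
        for bucket in buckets:
            for k, v in SingleNodeEngine().run(bucket, key).items():
                merged[k] = merged.get(k, 0) + v
        return merged


# The rest of the platform refers to engines by name only.
ENGINES: Dict[str, Callable[[], ComputeEngine]] = {
    "single-node": SingleNodeEngine,
    "partitioned": PartitionedEngine,
}


def count_by(engine_name: str, rows: List[dict], key: str) -> Dict[str, int]:
    """Pipeline code depends on the interface, not on a concrete engine."""
    engine = ENGINES[engine_name]()
    return engine.run(rows, key)
```

Upgrading the engine then means changing the name passed to `count_by` (or the registry entry), while callers and results stay the same.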

3. Freedom from Lock-In

Our framework is designed to provide the agility needed to adapt and innovate freely:

Modular Design: Allows components to be updated independently as newer technologies become available, protecting existing investments and minimising disruption.

Continuous Integration and Delivery (CI/CD): Automated testing and deployment let updates be integrated smoothly, keeping system performance high and downtime to a minimum.
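The gating logic behind a CI/CD pipeline like this can be sketched in a few lines. This is a hypothetical, stdlib-only illustration: the `Stage` and `PipelineResult` types, the `deploy` callback, and the environment names are assumptions, not datasapiens' actual pipeline or the API of any CI tool.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """One hypothetical pipeline step; `check` returns True on success."""
    name: str
    check: Callable[[], bool]


@dataclass
class PipelineResult:
    passed: List[str] = field(default_factory=list)
    deployed_to: List[str] = field(default_factory=list)
    failed: str = ""  # name of the step that stopped the pipeline, if any


def run_pipeline(
    stages: List[Stage],
    environments: Tuple[str, ...],
    deploy: Callable[[str], bool],
) -> PipelineResult:
    """Run checks in order, then promote through environments one at a time.

    Nothing is deployed unless every check passes, and a failed deployment
    halts promotion, so later environments (e.g. production) are untouched.
    """
    result = PipelineResult()
    for stage in stages:
        if not stage.check():
            result.failed = stage.name
            return result
        result.passed.append(stage.name)
    for env in environments:
        if not deploy(env):
            result.failed = f"deploy:{env}"
            return result
        result.deployed_to.append(env)
    return result
```

Passing `("non-production", "production")` as the environments deploys to non-production first and proceeds to production only if that deployment reports success.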

4. Cost-Effectiveness Through Advanced Technologies

We deploy cutting-edge technologies to keep our Data Lake framework economical:

Infrastructure Efficiency: Uses cloud-agnostic platforms and open-source technologies such as Delta Lake and Apache Spark, avoiding licensing fees and reducing infrastructure costs.

Regular Technological Updates: Continuously adopts newer, more efficient technologies to keep operational costs low and performance high.

5. Component-Specific Highlights

File Formats and Table Management: Uses Apache Parquet as the file format and Delta Lake for table management, improving the efficiency of data storage and retrieval.

Computational Frameworks: Employs Apache Spark for large-scale data processing and Trino for distributed SQL queries, providing processing power and scalability.

AI/ML Integration: Incorporates MLflow with Python and R environments to support advanced analytics and machine learning capabilities.
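Delta Lake's table management rests on a transaction log: each commit is a JSON file in the table's `_delta_log` directory, named by its zero-padded version number, listing actions such as adding or removing Parquet data files. The current table, or an earlier version for time travel, is obtained by replaying those commits in order. The stdlib-only sketch below illustrates that replay idea. It is a simplification, not the Delta Lake library: it handles only `add` and `remove` actions, the example file names are made up, and fields a real log requires (sizes, timestamps, metadata, checkpoints) are omitted.

```python
import json
from typing import Dict, Optional, Set


def commit_file_name(version: int) -> str:
    """Commit files are named by 20-digit zero-padded version number."""
    return f"{version:020d}.json"


def replay(commits: Dict[str, str], as_of: Optional[int] = None) -> Set[str]:
    """Return the data files that make up the table at version `as_of`.

    `commits` maps commit file names to newline-delimited JSON actions.
    If `as_of` is None, all commits are replayed (latest version).
    """
    files: Set[str] = set()
    # Zero-padding makes lexical order equal to version order.
    for name in sorted(commits):
        version = int(name.split(".")[0])
        if as_of is not None and version > as_of:
            break
        for line in commits[name].splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files


# Hypothetical two-commit log: version 1 rewrites part-0000 as part-0002.
log = {
    commit_file_name(0): '{"add": {"path": "part-0000.parquet"}}\n'
                         '{"add": {"path": "part-0001.parquet"}}',
    commit_file_name(1): '{"remove": {"path": "part-0000.parquet"}}\n'
                         '{"add": {"path": "part-0002.parquet"}}',
}
```

With this log, `replay(log)` yields `part-0001.parquet` and `part-0002.parquet`, while `replay(log, as_of=0)` reconstructs the original version with `part-0000.parquet` and `part-0001.parquet`. Because removed files stay on storage until they are cleaned up, older versions remain readable.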

6. Security and Infrastructure Architecture

Our infrastructure is strategically designed to ensure security and high performance:

Robust Security Measures: Maintains separate production and non-production environments to support compliance and operational integrity.

Advanced Software Integration: Integrates software stacks suited to diverse computing needs and complex data workflows, providing scalable and secure data solutions.
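Separate environments only protect production if nothing in a non-production environment can reach production data or credentials. A hypothetical, stdlib-only sketch of a configuration check that enforces this; the `Environment` fields, the `isolation_problems` function, and the example paths are assumptions for illustration, not datasapiens' configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Environment:
    """Hypothetical per-environment settings."""
    name: str
    storage_root: str    # root path of the environment's data
    credentials_id: str  # reference to a secret, never the secret itself
    production: bool


def _overlaps(a: str, b: str) -> bool:
    """True if one storage root contains the other (trailing slash avoids
    false matches such as '/lake/prod' vs '/lake/prod-test')."""
    a_norm, b_norm = a.rstrip("/") + "/", b.rstrip("/") + "/"
    return a_norm.startswith(b_norm) or b_norm.startswith(a_norm)


def isolation_problems(envs: List[Environment]) -> List[str]:
    """List ways a non-production environment could touch production resources."""
    problems: List[str] = []
    prod = [e for e in envs if e.production]
    non_prod = [e for e in envs if not e.production]
    for p in prod:
        for n in non_prod:
            if _overlaps(n.storage_root, p.storage_root):
                problems.append(f"{n.name} storage overlaps {p.name}")
            if n.credentials_id == p.credentials_id:
                problems.append(f"{n.name} shares credentials with {p.name}")
    return problems
```

A check like this can run as one of the automated pipeline stages, so that a misconfigured environment is rejected before anything is deployed.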

Conclusion

At datasapiens, we offer more than just technology; we provide a transformational platform that aligns with your strategic data goals. Our open, flexible, and cost-effective solutions enable you to make full use of your data assets to drive innovation and maintain a competitive advantage. As technology evolves, we remain committed to adapting and enhancing our solutions so that you are prepared to meet future challenges head-on.

This Data Lake architecture is just the starting point. In our next blog post, we will delve into the specific applications built on this foundation, exploring how they drive business value and foster data-driven decision-making.